What's the evidence for this claim? Let's start by briefly explaining what the H-index is. It's computed by rank ordering a set of publications in terms of their citation count, and identifying the point where the rank order exceeds the number of citations. So if a person has an H-index of 20 this means that they've published 20 papers with at least 20 citations – but their 21st paper (if there is one) had fewer than 21 citations.
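The definition above can be sketched in a few lines of Python. This is only a minimal illustration: the function name `h_index` and the citation counts are invented for the example and are not taken from any real author's record.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank      # this paper still has at least as many citations as its rank
        else:
            break         # rank now exceeds the citation count: stop here
    return h


# Made-up citation counts, for illustration only.
example = [25, 8, 5, 3, 3, 1]
print(h_index(example))
```

With these invented counts the fourth-ranked paper has only 3 citations, so the index stops at 3.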

The reason this is reckoned to be relatively impervious to gaming is that authors don't, in general, have much control over whether their papers get published in the first place, and how many citations their published papers get: that's down to other people. You can, of course, cite yourself, but most reviewers and editors would spot if an author was citing themselves inappropriately, and would tell them to remove otiose references. Nevertheless, self-citation is an issue. Another issue is superfluous authorship: if I were to have an agreement with another author that we'd always put each other down as authors on our papers, then both our H-indices would benefit from any citations that our papers attracted. In principle, both these tricks could be dealt with: e.g., by omitting self-citations from the H-index computation, and by dividing the number of citations by the number of authors before computing the H-index. In practice, though, this is not usually done, and the H-index is widely used when making hiring and promotion decisions.
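To make the two corrections concrete, here is a rough sketch of how an adjusted index might be computed. Everything in it is an assumption made for illustration: the `Paper` structure, the function name `adjusted_h_index`, and the working definition of a self-citation as any citing paper that lists the person among its authors. Real citation databases may define and handle these things differently.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    authors: frozenset      # names on this paper's byline (hypothetical structure)
    citing_authors: list    # one set of author names per paper that cites it


def adjusted_h_index(papers, person, drop_self=True, per_author=True):
    """H-index for `person`, optionally excluding self-citations and
    dividing each paper's citation count by its number of authors."""
    scores = []
    for paper in papers:
        # Assumed definition: a self-citation is a citing paper with `person` as an author.
        cites = [c for c in paper.citing_authors
                 if not (drop_self and person in c)]
        score = len(cites)
        if per_author:
            score = score / len(paper.authors)
        scores.append(score)

    # Same rank-ordering rule as the ordinary H-index.
    scores.sort(reverse=True)
    h = 0
    for rank, score in enumerate(scores, start=1):
        if score >= rank:
            h = rank
        else:
            break
    return h


# Invented records for a hypothetical author "A".
papers = [
    Paper(frozenset({"A"}), [{"B"}, {"C"}, {"A"}, {"A"}]),
    Paper(frozenset({"A", "B"}), [{"C"}, {"D"}, {"E"}, {"F"}]),
    Paper(frozenset({"A"}), [{"A"}, {"A"}, {"A"}]),
]
print(adjusted_h_index(papers, "A", drop_self=False, per_author=False))
print(adjusted_h_index(papers, "A"))
```

In this toy record the unadjusted index is 3, and it falls to 2 once self-citations are dropped and counts are shared between authors.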

In my previous blogpost, I described unusual editorial practices at two journals – Research in Developmental Disabilities and Research in Autism Spectrum Disorders – that had led to the editor, Johnny Matson, achieving an H-index on Web of Science of 59. (Since I wrote that blogpost it's risen to 60.) That impressive H-index was based in part, however, on Matson publishing numerous papers in his own journals, and engaging in self-citation at a rate that was at least five times higher than typical for productive researchers in his field.

It seems, though, that this is just the tip of a large and ugly iceberg. When looking at Matson's publications, I found two other journals where he published an unusual number of papers: Developmental Neurorehabilitation (DN) and Journal of Developmental and Physical Disabilities (JDPD). JDPD does not publish dates of submission and acceptance for its papers, but DN does, and I found that for 32 papers co-authored by Matson in this journal between 2010 and 2014 for which the information was available, the median lag between a paper being received and being accepted was one day. So it seemed a good idea to look at the editors of DN and JDPD. What I found was a very cosy relationship between editors of all four journals.

Figure 1 shows associate editors and editorial board members who have published a lot in some or all of the four journals. It is clear that, just as Matson published frequently in DN and JDPD, so too did the editors of DN and JDPD publish frequently in RASD and RIDD. Looking at some of the publications, it was also evident that these other editors frequently co-authored papers. For instance, over a four-year period (2010-2014) there were 140 papers co-authored by Mark O'Reilly, Jeff Sigafoos, and Giulio Lancioni. Interestingly, Matson did not co-author with this group, but he frequently accepted their papers in his journals.

Figure 2 shows the distribution of publication lags for the 73 papers in RASD and RIDD where authors included the O'Reilly, Sigafoos and Lancioni trio. This shows the number of days between the paper being received by the journal and its acceptance. For anything less than a fortnight it is implausible that there could have been peer review.

Figure 2: Lag from paper received to acceptance (days) for 73 papers co-authored by Sigafoos, Lancioni and O'Reilly, 2010-2014

Papers by this trio of authors were not only accepted with breathtaking speed: they were also often on topics that seem rather remote from 'developmental disabilities', such as post-coma behaviour, amyotrophic lateral sclerosis, and Alzheimer's disease. Many were review papers and others were in effect case reports based on two or three patients. The content was so slender that it was often hard to see why the input of three experts from different continents was required. Although none of these three authors achieved Matson's astounding rate of 54% self-citations, they all self-cited at well above normal rates: Lancioni at 32%, O'Reilly at 31% and Sigafoos at 26%. It's hard to see what explanation there could be for this pattern of behaviour other than a deliberate attempt to boost the H-index. All three authors have a lifetime H-index of 24 or over.

One has to ask whether the publishers of these journals were asleep on the job, not to notice the remarkable turnaround of papers from the same small group of people. In the Comments on my previous post, Michael Osuch, a representative of Elsevier, reassured me that "Under Dr Matson’s editorship of both RIDD and RASD all accepted papers were reviewed, and papers on which Dr Matson was an author were handled by one of the former Associate Editors." I queried this because I was aware of cases of papers being accepted without peer review and asked if the publisher had checked the files: something that should be easy in these days of electronic journal management. I was told "Yes, we have looked at the files. In a minority of cases, Dr Matson acted as sole referee." My only response to this is, see Figure 2.

Reference

Hirsch, J. (2005). An index to quantify an individual's scientific research output. Proceedings of the National Academy of Sciences, 102(46), 16569-16572. DOI: 10.1073/pnas.0507655102

P. S. I have now posted the raw data on which these analyses are based here.

P.P.S. 1st March 2015

Some of those commenting on this blogpost have argued that I am behaving unfairly in singling out specific authors for criticism. Their argument is that many people were getting papers published in RASD and RIDD with very short time lags, so why should I pick on Sigafoos, O'Reilly, and Lancioni?

I should note first of all that the argument 'everyone's doing it' is not a very good one. It would seem that this field has some pretty weird standards if it is regarded as normal to have papers published without peer review in journals that are thought to be peer-reviewed.

Be this as it may, since some people don't seem to have understood the blogpost, let me state more explicitly the reasons why I have singled out these three individuals. Their situation is different from others who have achieved easy reviewer-free publication in that:

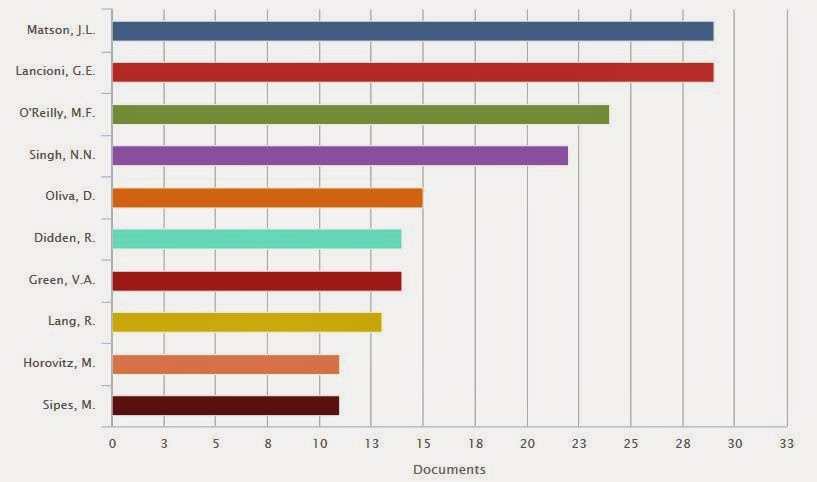

1. Their publications don't only seem to get into RIDD and RASD very quickly; they also are of a quite staggering quantity – they eclipse all other authors other than Matson. It's easy to get the stats from Scopus, so I am showing the relevant graphs here, for RIDD/RASD together and also for the other two journals I focused on, Developmental Neurorehabilitation and JDPD.

Top 10 authors RASD/RIDD 2010-2014: from Scopus

Top 10 authors 2010-2014: Developmental Neurorehabilitation (from Scopus)

Top 10 authors 2010-2014: JDPD (from Scopus)

2. All three have played editorial roles for some of these four journals. Sigafoos was previously editor at Developmental Neurorehabilitation, and until recently was listed as associate editor at RASD and JDPD. O'Reilly is editor of JDPD, is on the editorial board of Developmental Neurorehabilitation and was until 2015 on the editorial board of RIDD. Lancioni was until 2015 an associate editor of RIDD.

Now if it is the case that Matson was accepting papers for RASD/RIDD without peer review (and even my critics seem to accept that was happening), as well as publishing a high volume of his own papers in those journals, then that is something that is certainly not normally accepted behaviour by an editor. The reason why journals have editorial boards is precisely to ensure that the journal is run properly. If these editors were aware that you could get loads of papers into RASD/RIDD without peer review, then their reaction should have been to query the practice, not to take advantage of it. Allowing it to continue has put the reputation of these journals at risk. You might ask why I didn't include other associate editors or board members. Well, for a start none of them was quite so prolific in using RASD/RIDD as a publication outlet, and, if my own experience is anything to go by, it seems possible that some of them were unaware that they were even listed as playing an editorial role.

Far from raising questions with Matson about the lack of peer review in his journals, O'Reilly and Sigafoos appear to have encouraged him to publish in the journals they edited. Information about publication lag is not available for JDPD; in Developmental Neurorehabilitation, Matson's papers were being accepted with such lightning speed as to preclude peer review.

Being an editor is a high status role that provides many opportunities but also carries responsibilities. My case is that these were not taken seriously and this has caused this whole field of study to suffer a major loss of credibility.

I note that there are plans to take complaints about my behaviour to the Vice Chancellor at the University of Oxford. I'm sure he'll be very interested to hear from complainants and astonished to learn about what passes for acceptable publication practices in this field.

P.P.P.S. 7th March 2015

I note from the comments that there are those who think that I should not criticise the trio of Sigafoos, O'Reilly and Lancioni for having numerous papers published in RASD and RIDD with remarkably short acceptance times, because others had papers accepted in these journals with equally short lags between submission and acceptance. I've been accused of cherry-picking data to try and make a case that these three were gaming the system.

As noted above, I think that to repeatedly submit work to a journal knowing that it will be published without peer review, while giving the impression that it is peer-reviewed (and hence eligible for inclusion in metrics such as H-index) is unacceptable in absolute terms, regardless of who else is doing it. It is particularly problematic in someone who has been given editorial responsibility. However, it is undoubtedly true that rapid acceptance of papers was not uncommon under Matson's editorship. I know this both from people who've emailed me personally about it and from brave people who mention it in the Comments. However, most of these people were not gaming the system: they were surprised to find such easy acceptance, but didn't go on to submit numerous papers to RASD and RIDD once they became aware of it.

So should I do an analysis to show that, even by the lax editorial standards of RASD/RIDD, Sigafoos/O'Reilly/Lancioni (SOL) papers had preferential treatment? Personally I don't think it is necessary, but to satisfy complainants, I have done such an analysis. Here's the logic. If SOL are given preferential treatment, then we should find that the acceptance lag for their papers is less than for papers by other authors published around the same time. Accordingly, I searched on Web of Science for papers published in RASD during the period 2010-2014. For each paper authored by Sigafoos, I took as a 'control' paper the next paper in the Web of Science list that was not authored by any of the six individuals listed in Table 1 above, and checked its acceptance lag. There were 20 papers by Sigafoos: 18 of these were also co-authored by O'Reilly and Lancioni, and so I had already got their acceptance lag data. I added the data for the two additional Sigafoos papers. For one paper, data on acceptance lag was not provided, so this left 19 matched pairs of papers. For those authored by Sigafoos and colleagues, the median lag was 4 days. For papers with other authors, the median lag was 65 days. The difference is highly significant on matched pairs t-test; t = 3.19, p = .005. The data on which this analysis was based can be found here.
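For anyone who wants to run this kind of check themselves, the matched-pairs comparison can be sketched as follows. The lag values in the example are invented purely to show the mechanics and are not the data analysed above; the function name `paired_t` is likewise just a label chosen for this sketch. It returns the t statistic and degrees of freedom only; turning that into a p-value needs a t-distribution from a statistics package.

```python
import math
import statistics


def paired_t(first, second):
    """Matched-pairs t statistic for second minus first, with degrees of freedom."""
    # One difference per matched pair of papers.
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)          # sample standard deviation (n - 1)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Invented acceptance lags in days, for illustration only.
trio_lags = [1, 3, 4, 2, 10]
control_lags = [40, 65, 90, 30, 70]

print("median lag, trio papers:", statistics.median(trio_lags))
print("median lag, control papers:", statistics.median(control_lags))
t, df = paired_t(trio_lags, control_lags)
print(f"t = {t:.2f} on {df} degrees of freedom")
```

With 19 matched pairs, as in the analysis above, the test has 18 degrees of freedom.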

I dare say someone will now say I have cherry-picked data because I only analysed RASD and not RIDD papers. To that I would reply, the evidence for preferential treatment is so strong that if you want to argue it did not occur, it is up to you to do the analysis. Be warned, checking acceptance lags is very tedious work.

At this point, should we be asking Elsevier to retract any of Matson & his co-authors' articles where this same group of people with conflict of interests served as the only reviewers for each others' articles?

Yes, I think we should.

I agree - I think Elsevier should retract not just Matson's papers but those cited above.

140 papers in 4 years.....Do we have rough numbers for others in similar fields and positions to see how the number compares?!

A somewhat similar case here http://scholarlykitchen.sspnet.org/2012/04/10/emergence-of-a-citation-cartel/ leading to ISI impact factor ban http://scholarlykitchen.sspnet.org/2012/06/29/citation-cartel-journals-denied-2011-impact-factor/

I think it would be great if those providing and using the h-index could switch to excluding self-citations by default (unfortunately this seems not to be possible with Google Scholar profiles, though it is with Scopus as well as Web of Science).

Dividing citation counts by number of authors is an interesting idea, but seems more problematic to me, partly because the effect is much greater for an additional author when there are few authors; for example, a PhD student and their PI might be reluctant to have a helpful postdoc as a third author on their otherwise two-author paper, while a large consortium wouldn't care too much about giving gift authorship to a senior figure.

Unfortunately, even with both changes, groups of unscrupulous authors could still game the system by writing many single-author papers that cite each other's many single-author papers.

I think the most important single improvement to the system would be for articles only to get indexed (and counted in bibliometrics) if they meet certain minimal peer-review standards, e.g. at least two reviewers (ideally not counting the editor). The indexing agencies should have the right to ask for evidence of this for randomly sampled papers (which they would keep confidential). Removing the many editorials that are not peer-reviewed would also help to avoid the situation that Rogier links to above.

@Ged Ridgway

"Minimal peer-review standards" will not help. Unscrupulous people can still easily game it. In your example of at least two reviewers it requires a gang of 4 people (an author, an editor and 2 reviewers). As we see from the above blog post, this particular gang already has more than enough people to game that "minimal peer review standards" system.

"Minimal peer review standards" will only make things harder for honest people, unscrupulous people will have no problems with it.

The only thing that helps is naming and shaming.

Sigafoos was associate editor of RASD and now runs a journal called "Evidence-based Communication Assessment and Intervention." There are other members of this group who publish with O'Reilly et al., including Wendy Machalicek, Mandy Rispoli, and Nirbhay Singh.

Matson, O'Reilly, Lancioni, and Sigafoos have been publishing for a while, prior to their editorship of these journals.

But their less senior publishing colleagues, some of whom are former students, have basically been groomed in this "model" of scholarship, with half or more of their total publications in these four journals. For example, of the first 52 publications for Russell Lang that appear in Google Scholar (he has many more), 29 were published in RASD, RIDD, and DN, all with very short review times and the large majority with no evidence of revision. In other words, without peer review.

I am also concerned about the very short review times, but is it an issue to publish primarily in a handful of journals? I always have the same few in my mind when I submit.

If you are in a small field, then it may be that there are limited options for journals, so having a small range of outlets is not necessarily a problem. However, if an editor publishes in their own journal, then it is important to have a robust system for ensuring that there is adequate peer review.

I can't say I'm surprised given that LSU has a culture of vita fraud, at least it did when I was there a few years ago. Apparently, if all this about Johnny Matson holds up, it's only getting worse. Promotion review committees simply turn a blind eye to vitas that contain such egregious misrepresentations as textbooks listed as research monographs, letters to the editor of Nature listed as peer reviewed articles in Nature, essays in society news letters listed as peer reviewed articles, and yes, as this case so ably demonstrates, editors of journals publishing in their own journals and claiming the articles are peer reviewed pubs. Why are the committees and deans complicit? I am guessing that they are either so unaccomplished themselves that they don't know how to spot obvious clunkers on a vita or they don't dare snitch for fear of being found out themselves....

LSU seems to be a hotbed of academic fraud. A few months ago an English prof who used to be at LSU was fired from Uni. of Nevada at Las Vegas for career-long plagiarism. Just search for "Mustapha Marrochi UNLV LSU plagiarist." Apparently he had been plagiarizing copiously since his dissertation but was hired, tenured and promoted to full prof by LSU committees and deans. Then he got a highly paid endowed position at UNLV. But someone there finally got his number, and they fired him. Major egg on LSU's face--yet again.

This is a witch hunt. LSU is a great university.

"Witch hunt" means the persecution of a group of people because of their unconventional behavior. On the basis of the data and analysis presented in this blog and reported on in highly reputed media such as the Chronicle of Higher Education, a great university would indeed consider such behavior unconventional. Moreover, it would consider such behavior unacceptable and indeed be proactive about identifying, investigating, and disciplining such unscholarly scholars.

Maybe apply some of the same scrutiny to this blog post that you are carelessly accusing people left and right of not using in the peer review process.

A lot of people in the field have reviewed this blog post extremely carefully and are highly disturbed by all of it and, especially, figure 2. It indicates that Matson and his collaborators are claiming they are publishing peer reviewed articles when in fact they are not being peer reviewed. That is not only dishonest, if true, but warrants full investigation by their universities and funding agencies.

Your comment is too vague to be of any use. Please specify which parts of my blogpost are in error and I will correct them.

I think it should be noted that many of the studies are not simply "case reports on two or three patients," but studies that use single case design to track behavioral outcomes. In single case design, each subject functions as his or her own control, and specific procedures are used to establish experimental control. This type of methodology is very frequently used in the field of Special Education, and has a long history that stems from the early behavioral studies. It is particularly useful when the population being studied has a low incidence, when participants are not readily available for large group studies. Single case design studies have yielded a great deal of the knowledge we have about effective behavioral interventions for children with autism and other developmental disabilities. It is so commonly used in the field of special education that any American PhD student in SPED would be expected to have had at least one course in this methodology. Additionally, single case studies can often be conducted quickly and with no grant funding, so it is feasible to get several done over the course of a few years. 140 papers split by 4 authors and 4 years means that each author would need to publish 7 to 10 single subject studies a year. This is high, for sure, but certainly feasible for a single subject researcher that has a research infrastructure going and graduate students that are running several different studies.

Also, I looked up a few of the studies on ALS, and they use single case design to track the intervention effectiveness of assistive technology that would certainly be useful for persons with developmental disabilities, so it was not entirely irrelevant to the journal.

I'm not saying that wrongdoing hasn't occurred, just clearing up some issues around some of the evidence Dorothy Bishop has put forth and perhaps misinterpreted. She can certainly be forgiven for not knowing about these things, as it is slightly outside of the realm of her area of expertise.

I'm not an expert. But I think the most shocking thing worthy of note is Figure 2...

Agreed. The acceptance time is really quite disturbing.

We have known for a long time that these researchers were producing tons of papers. The problem, however, is that if they weren't undergoing standard peer review (and were instead being accepted the same day as they were submitted with no revisions the majority of the time), their work simply didn't undergo the same scrutiny as everyone else's. It is easy to publish 50 papers a year if you can say anything you want and no one ever truly reads your paper in a real peer review process. If your friends are just accepting all your papers, that's a different system than everyone else uses to publish papers. The time from writing to submission could be short, but who else has all of their papers peer reviewed within 1 day of submission and accepted with no revisions at all?

A lot of people. Search for yourself.

Even for Nature (Research articles and letters that undergo peer review), their stated ideal peer review time is going to be at least 2 weeks (1 week for an internal decision to send it out to review or reject, and a second week for the reviewers to complete their peer reviews).

Any turn-around from submission to acceptance at any journal that is shorter than 1 week likely means that the papers were not reviewed by anyone other than one of the journal editors. When you are the journal editor, short times for you and your friends will be particularly suspect because it suggests that they were only being reviewed by the editor's friends who were also getting accepted articles with no peer review. Even for Nature, the time between submission and final acceptance is still mostly on the order of a few months for things they publish (e.g., they reject quickly but the full process is still time-consuming for those they publish). For Nature, all the things published in their current issue took at least a month to complete the peer review process. We don't mind quick rejections, but the RASD/RIDD articles in Figure 2 just aren't being sent out for outside peer review.

If "a lot of people" aren't having their work peer reviewed, that is a huge issue for scientists that we need to solve. People behaving badly shouldn't have to be accepted just because others behave badly: http://www.nature.com/news/publishing-the-peer-review-scam-1.16400

I do wish people would put their names to Comments. But given that I've got all these Anonymice to deal with, this is in reply to the person who said "140 papers split by 4 authors and 4 years means that each author would need to publish 7 to 10 single subject studies a year."

Nope, that's not what the blog states. There were 140 papers over 4 years in which all three authors - Sigafoos, O'Reilly and Lancioni - were co-authors, usually accompanied by other coauthors.

So these guys produced 35 papers per year on average. That's roughly one every 10 days.

Matson produced an additional 225 papers over that 4 year period, i.e. around one per week (with various co-authors, many of whom appear to have been students). He somehow did this despite also being responsible for acting as editor for the high volume of papers submitted to RASD and RIDD.

I am aware that single case reports are a widely-used method in some fields. However, that doesn't really address the problem here, which is that:

a) Matson has been publishing a lot of work in his own journals.

b) He has a very high rate of self-citation

c) He also publishes in Developmental Neurorehabilitation, (previously edited by Sigafoos) but the lag between submission and acceptance indicates that his papers published there could not have been peer reviewed.

d) Papers by the Sigafoos/O'Reilly/Lancioni trio appear to have been published in RASD/RIDD, edited by Matson, without peer review - again, going by the published information on lag between submission and acceptance.

One of these points alone would look bad. All four in combination look very bad indeed.

I am finding many many names of people with acceptance time less than 3 or 4 days in RASD and RIDD. I don't want to post names in a witch hunt, but anyone can go look and see. The data DB collected were specific to this group of people. That seems odd.

@Deevybee - Your reference to it as single case report and not "single subject research" or "single case design" betrays an unfamiliarity with the methodology (and diminishes it to some extent), and distracts from your very good point about the obvious lack of peer review.

Out of curiosity I conducted a cursory overview of time lag between submission to acceptance of all papers published for one year of RIDD (one of the journals mentioned by Professor Bishop). Almost 50% of the articles published that year were accepted within 2 weeks of submission. A very small percentage of those papers accepted within 2 weeks of submission were by the authors mentioned above. So such rapid lag between submission and acceptance seems to be the norm in terms of editorial practice in RIDD. Of course it would be interesting if someone were to undertake the arduous task of looking at all volumes of RIDD and RASD mentioned above in the blog by Professor Bishop and systematically examine lag between submission and acceptance. My guess is that we will see a large percentage of papers accepted for publication in 2 weeks or less in both journals.

This rapid review may be a general concern. However, editorial policy of this journal seems to clearly state (at least to me) that the editor has the authority to handle the review process himself. If people are concerned with such a practice then they should not submit to this journal. However, as I review the names of authors who have published in this journal I generate a list of some of the top researchers in intellectual disability in the UK such as Emerson, Oliver, Wing, Hastings etc. * I also noticed that some of the researchers mentioned above (Lancioni) have published large quantities of work in this journal for decades. Professor Bishop notes that there is no collaboration with regard to publications between Matson and Lancioni et al.

So what is going on here? It would appear from my cursory analysis that Professor Bishop has cherry picked a data set for a small group of researchers in order to create an illusion of collusion.

Perhaps we have a case of - Oxford Professor Behaving Badly?

*Wing, L., Gould, J., & Gillberg, C. (2011). Autism spectrum disorders in the DSM-V: Better or worse than the DSM-IV? Research in Developmental Disabilities, 32, 768-773. (Received October 29, 2010. Accepted November 5, 2010).

In response to OXFORD PROFESSOR BEHAVING BADLY comment above: That is a good question. Does Bishop have a rivalry or reason to dislike this group?

lol, Maybe she was rejected from RIDD after a long wait and that's why she's so mad

Nice try, but I don't submit to Elsevier journals so this doesn't apply. And if I were to take vengeance on all editors who had rejected my work, it'd take up a hell of a lot of time.

Nope, this started when I was alerted to the fact I was listed on the editorial board of a Journal whose editor was behaving unusually, to say the least, and then developed from there.

This is nothing to laugh about: it's bad news for the numerous people who have, in good faith, published in these journals thinking they were reputable, peer-reviewed publications.

Nope. The only thing I knew about Matson before I became aware of this issue was good: he'd written a nice paper on diagnostic instruments that I'd cited. I'd never heard of Sigafoos, Lancioni or O'Reilly before I started looking at this. As explained above, Sigafoos and O'Reilly came to my attention because they had an editorial role in Developmental Neurorehabilitation / Journal of Developmental and Physical Disabilities - 2 journals that published a lot of Matson papers with no indication of peer review. I looked at their publications and it was striking that they very frequently co-authored in a trio with Lancioni - who is also an associate editor at RIDD.

The defence that it was USUAL for Matson to accept papers within 2 weeks in RASD/RIDD is no defence at all, and is totally at odds with what the publisher, Elsevier, says, both in their responses to my previous blogpost, and here on their website, where they say: "Reviewers play an essential part in research and in scholarly publishing. Elsevier, like most academic publishing companies, relies on effective peer-review processes to uphold the quality and validity of individual articles and the overall integrity of the journals we publish". http://www.elsevier.com/editors/peer-review

These journals are also listed in Web of Science by Thomson Reuters, one of whose criteria is that the journal is peer reviewed: see http://wokinfo.com/essays/journal-selection-process/

No doubt we will see what Thomson Reuters makes of this in due course.

Why wouldn't you do that before you made accusations like these in a blog post? Wouldn't that have been more responsible?

I'm no Matson supporter, but it does strike me as curious that Professor Bishop has now selected a trio of researchers to focus in on...when hundreds of different researchers have similar publication rates/acceptance to print turnarounds in journals.

Seems like a targeted attack aimed to defame ABA scholars and perhaps ABA?

Someone should take a look at RIDD and RASD as a whole as the commenter above suggests...

Is someone doing this? I think we'd all love to see the entire picture.

She's been very clear about her process. She just pulled journals that Matson was publishing in frequently and then looked at Editors of those journals. She has no reason to target ABA scholars or ABA. She had not even heard of most of these scholars prior to investigating.

So she stumbled across what seemed like a hot finding and followed it to her conclusion? And now is ignoring counter evidence because of what? Sounds horrifically irresponsible when people's reputations are at stake.

There hasn't yet been counter-evidence of the claim that potentially multiple editors at these journals were accepting articles that hadn't undergone real peer review. No one has actually provided evidence that all of the articles underwent peer review following best practices for peer review. This second post was based on follow-up conversations about how it was likely bigger than Matson and possibly extended to other people he collaborated with who would have to have known about the lack of peer review and may have had opportunities to profit from the lack of following peer review best practices. Following best practices for peer review is how we ensure ethics and honesty and build trust with other researchers and the community at large. The concern here is whether or not best practices for peer review and research ethics were upheld or not. It's a conversation worth having.

All of these journals have different paper submission formats (brief reports, full papers, reviews, case studies, editorials, etc). In Informa Health Care’s online system, if a paper is changed from one format to another it gets a different manuscript number. A new manuscript number is also given if the authors make an error when they submit revisions (this happens more than you’d think) and when the authors take too long to submit revisions. The tracking system that puts the dates of submission on the first page of the papers is based on manuscript number. So, if the paper is changed from a full paper to a brief report, if the author makes an error or takes too long, that date you’re basing all of these accusations on is wrong. These blog posts (perhaps with good intentions) are based on bad data that you didn’t have peer reviewed before you posted it to the world.

It is important to note that I do not know if Elsevier's system works the same way as Informa's.

Professor behaving badly Bishop has misconstrued a data set with malicious intent. I’m sure this is not the first time that such behavior has occurred. I strongly suggest that professionals contact the Vice Chancellor for Research at Oxford University and her professional peers at the British Psychological Society and complain.

On the contrary, I only wish that all scientists were like Bishop. I'm not brown nosing; I bet most people would agree with me. A cursory look at her blog posts clearly reveals a scientist with integrity. Whereas you, on the other hand, have made three assertions (Bishop has misconstrued a data set, with malicious intent, and that you're sure that this is not the first time such behaviour has occurred) with no evidence, and then make a ridiculous suggestion on the back of it. This is classic "troll" behaviour!

"most reviewers and editors would spot if an author was citing themselves inappropriately, and would tell them to remove otiose references"

Yes, that would be nice. I'll leave this here (from Personality & Individual Differences):

http://4.bp.blogspot.com/-ZbjTZRfpQUM/VOpmLWM4YrI/AAAAAAAAQYs/4h4ao1sd5Jo/s1600/jonason.PNG

For Oxford Complaints:

CONTACT DETAILS

Vice-Chancellor's Office

University Offices

Wellington Square

Oxford

OX1 2JD

vcweb@admin.ox.ac.uk

Telephone: 01865 270252

Thanks, now I know where to send my note of support for Dr. Bishop's excellent work in exposing this situation!

Me too!

For the British Psychological Society:

The British Psychological Society

St Andrews House

48 Princess Road East

Leicester

LE1 7DR

Tel: +44 (0)116 254 9568

Fax: +44 (0)116 227 1314

Email: enquiries@bps.org.uk

I think everyone needs to calm down. We should not be judging Dorothy Bishop on this; all she has done is open an interesting and potentially very important debate with huge ramifications. Telling people that Dorothy Bishop has made mistakes is going to create a huge problem for the field of psychology, since she is very well respected in her field. The most important thing I think we need to consider is that this is not malicious intent. If it was, she would not be using evidence. She has calculated the self-citation rates in comparison with other authors in that field, and she started looking into this as a result of being listed on the editorial board without being told in advance. How does this make her malicious? She is not being malicious; she is concerned for science's reputation and wants to publish the truth, not for personal gain. I think we need to consider that. It may seem aggressive but it isn't. She is trying to bring this situation to light before other people who may publish in this journal are seen in a negative light. Therefore, if they are young junior lecturers, their reputation will not be tarnished. Same with the older lecturers. I actually applaud her for mentioning this.

Dorothy, the reason I am staying anonymous is that I do not want my career to begin poorly with enemies at the start. I am about to start my PhD and I do not want this yet. But I applaud you for raising this issue, and it needs to be dealt with swiftly.

Finally, about her cherry-picking the data: it is wrong. But, unlike research in which you have a dependent variable with a small and finite range, there may be so many researchers in the fields of special education and intellectual disability that it will be difficult to find every single person who self-cites or publishes their own work in a year without peer review.

I am making a list of people who have published in less than 2 weeks in RIDD and RASD. I have gone through 2 years, and the list is over 50 people long. Further, the amount of time a paper is in review when submitted by another group (i.e., not the people listed by name above) is really not different. I think it is worthwhile to have a conversation about different review policies and, perhaps about the specific policy used by Matson’s journals. However, I do not think there is any data to support favoritism for the group of authors in the table in RIDD and RASD.

I am posting anon because listing my name might make me subject to attacks also. I do not want to post the list of names of people who have had fast decisions, but it is easy to obtain this information on the journal’s webpage.

If over 50 different authors had their work published without peer review and basically "everyone" was taking advantage of Matson's editorial policy, then we may be on the cusp of the largest set of retracted papers in the history of academia.

Perhaps you could provide these data to Prof. Bishop so she could post it?

Nothing poisons science like having a strong preferred outcome and Oxford Professor Behaving Badly Bishop is blatantly guilty of this. She misrepresented data to malign colleagues. Now her behavior is under scrutiny. I strongly suggest that people contact Times Higher Education that printed the Matson article and encourage them to write a piece on the now exposed charlatan at Oxford. Contact details for the person who wrote the article are below:

Paul.jump@tesglobal.com

You are out of control.

I am going to introduce the words "libel" and "harassment" into this conversation. Bishop has made a quantitative argument. Sam has made none.

There are very few things more pathetic than a sock puppet throwing out laughably false claims in order to divert attention from their own behaviors.

Don't worry, there will be jobs at Fox News waiting for all of you when this is done.

In RASD, Matson's papers have some of the longest review times. Maybe the reviewers or AE he selected were inappropriate, as Bishop asserts, but it looks like he held his papers to a higher standard of review based on time in review.

Anon out of fear of retaliation.

In RIDD, his papers also have longest review times when compared to other papers...but the average latency from submission to acceptance in 2010 for papers THAT EXCLUDE Lancioni, Sigafoos, O'Reilly group is just under 8 days (counting first day of submission) with a range of 1-14 days.

So, yeah... maybe something funky here, but the sexier topic of editor collusion appears to be unfounded. You can look for yourself on the RIDD webpage.

From my own experience as a junior scholar, I will say that when AEs I respect ask me to review a paper I often turn it around in 24-48 hours to impress. Is there any way to rule out that this was happening in some of the quick turnarounds? Why not look into potential alternative explanations for this pattern instead of looking straight to nefarious? Isn't that good science, Professor Bishop?!

Anon due to junior faculty status.

re to above: just under 8 days for papers accepted within 2 weeks of submission...there are of course much longer delays.

Anon due to junior faculty status
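For readers who want to check latency figures like the ones quoted above, here is a minimal sketch of the calculation. It assumes the received and accepted dates have already been copied from each article's first page; the dates in `papers` are made up for illustration and are not taken from RIDD or RASD.

```python
from datetime import date
from statistics import mean


def latency_days(received: date, accepted: date) -> int:
    """Days from submission to acceptance, counting the submission day as day 1."""
    return (accepted - received).days + 1


# Hypothetical (received, accepted) pairs, for illustration only.
papers = [
    (date(2010, 3, 1), date(2010, 3, 1)),
    (date(2010, 3, 2), date(2010, 3, 9)),
    (date(2010, 4, 10), date(2010, 4, 23)),
    (date(2010, 5, 1), date(2010, 7, 15)),
]

latencies = [latency_days(received, accepted) for received, accepted in papers]

# Keep only papers accepted within two weeks, as in the comment above.
fast = [days for days in latencies if days <= 14]

share_fast = len(fast) / len(latencies)
print(f"{len(fast)} of {len(latencies)} papers accepted within 14 days ({share_fast:.0%})")
print(f"mean latency of those papers: {mean(fast):.1f} days (range {min(fast)}-{max(fast)})")
```

Note that a mean taken only over papers accepted within two weeks cannot exceed 14 days by construction, so the share of papers that fall under that cutoff is probably the more informative number to compare across author groups.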

Prof. Bishop, I am guessing (though I could be wrong) that some of the folks targeted are trying to question your credibility by simply stating lies as if they are facts, knowing that most readers would not bother to take the hours of time, or if they might, deflecting to particular years that may not look quite so bad for the person posting.

It's likely that many people who know there is truth in what's been posted have been involved with one or more of the named people. They are unlikely to speak up because they have likely been complicit, for a number of possible reasons, such as (a) having noticed these practices previously, but not stepped up due to relationships with these people or not wanting to be targeted for retribution (particularly junior people); (b) having benefited in some way (e.g., being included as a co-author, being given leadership positions like editorships by these people, having papers rapidly accepted); and (c) being their students or friends and being brought up in a culture that emphasizes that cheaters win (and seeing the accolades that the cheaters receive) and someone will do it, so you might as well get on board.

The bottom line is that there is simply no way that a peer review process could be happening when a large proportion of papers are received and accepted within a couple of days/week.

Furthermore, there is no way that people who regularly publish one or more articles a month are doing so while conducting quality research and/or are faking data. There simply isn't enough time in the day, even for people who have no lives, children, etc. outside of work.

Good work, Prof Bishop. Ill-posed comments from shills suggest you are right!

Sure, if you ignore extant data that points to a contrary explanation. Just bad science.

Look, we can all agree that RASD and RIDD have historically been quick to send papers through the review process.

However, the authors defamed in this post do NOT appear to have had preferential treatment. Most everyone that has submitted to RASD & RIDD in 2010 & 2011 appears to have had preferential treatment in terms of quick peer review (until you prove otherwise). Matson was insanely quick moving these papers around: true.

Excellent questions are how the review process can be expedited while maintaining high standards and whether the system has accurately reported instances when revised papers were submitted late by an author, etc. but I wouldn't discount the possibility out of hand that an invested editor and his/her AEs and reviewers could pull off the extraordinary.

Can Bishop prove that fast thorough reviews are impossible? If it takes an experienced reviewer 3-4 hours to review a paper thoroughly, why can't that be pulled off in 48 hours? Procrastination likely....workload even more likely...but it is within the realm of possibility. That Bishop would rather cook the data to show a devious group behind the scenes seems to turn people on, but there are alternative explanations with data to back it up.

Don't blindly follow---review ALL of the data yourself before jumping on the bandwagon to condemn colleagues.

Anon junior faculty not a shill (AJFNS)

No, there is just no way that good peer review was occurring at those journals that quickly. I don't know that it can be proven, but I just don't think it is likely. I am in this field too. It only takes a few hours to read a paper well, but you need for all 3 reviewers to stop what they're doing and read that paper immediately, every time. It's just really unlikely that it was happening so expeditiously every time. It's certainly possible that everyone was getting such fast acceptance times, and that this set of scholars shouldn't be singled out. Still, those review times should very clearly signal that thorough peer review was not happening at RASD and RIDD at least, and we should be alarmed by that.

When I spoke to a colleague of mine about this, they told me that they already knew that something fishy was going on at RASD, and had stopped submitting there because they suspected that peer review wasn't happening. It's certainly tempting to ignore the problem of same day acceptance and keep submitting, but I think we need to hold ourselves to a higher standard. There's a temptation to be egotistical and believe that the same day acceptance is about scholarship and a quality product, but we have to avoid that temptation. Honestly, many of these papers are far from perfect, and could have been improved (or rejected) with peer review. Multiple baselines with only 2 tiers, systematic reviews that review only a handful of studies when many others qualify as eligible, blatant typos, etc.

It's not really on Bishop to prove anything. She's shined a light on some disturbing data and it is up to us as a community of scholars to figure out what that data is really showing, what our standards should be, and how we should hold scholars accountable.

Another anon junior faculty

"we need to hold ourselves to a higher standard"

I concur. And I think that this is the main concern here. We have a duty to other scientists (as well as to the general public) to produce good quality research. It's detrimental to the community to publish flawed research. And we know that there are a lot of flawed research studies out there (just choose a few studies at random!), and that these are being cited by people who don't have time to critically read the original papers. So we need to take peer review seriously. Credit should go to Bishop for spending time on this (she's not being paid to make enemies of other scientists!).

PS I'm not saying that any of Matson's studies are flawed, I'm just saying that we need to take peer review seriously.

Bishop started this whole incident off because she was listed as an editor when she was not. Having said that, she accepts that she may have agreed to this.

Is it a good thing that journals list people who have not contributed as an associate editor (even if they have agreed in principle to do so)? No, it is not. Is that practice restricted to Matson's journals? It is not. But there is a focus on individuals here rather than taking a constructive approach to improving standards.

Similarly, I think that Neuroskeptic highlights some of the conflicts of interest with regard to Matson's work. It is important to note these but Matson is far from unique. There is no proper standard accepted across the sciences and he was hardly making a lot of money from the use of those tools. While it is useful to examine these practices, it is becoming more about the man (and his collaborators) rather than the relevant issues.

As for editors accepting papers without peer review, I think we can accept that this can be problematic if it is happening frequently, but given the journal reserves the right to do this, then all people need to do is publish elsewhere.

The above post is poorly researched. Bishop should apologise to the above editors if she did not contact them before publishing this post. Her description of their relationship as "cosy" is a semantic slander and she did not compare it to other researchers working in the field. She also described some of the research as "case studies" which is an ignorant blunder. She is actually talking about single subject research designs. Likewise she describes the content as "slender" but at the same time questions how they can be reviewed quickly. In fields that use single subject research design frequently, replication and meta-analysis are of greater importance than in other types of research that focus on group designs and statistical control. Given the focus on replication and the fact that the authors may already be familiar with the original study the paper is based on, adequate peer review may be more efficient. This might not be the case, but if people like Bishop are going to throw these accusations out there, they need to be able to provide the evidence. By promoting her ignorant analysis of single subject research to her peers and the public, Bishop is damaging the reputations of good scientists like some of those mentioned above. I would recommend that she research before publishing such comments in the future, and since she clearly does not understand how single subject research methodology works, she should start with this book:

http://samples.sainsburysebooks.co.uk/9781135593209_sample_509118.pdf

Finally, it would be useful if she used comparisons for the likes of Matson. For example, how does Matson compare to somebody like Simon Baron Cohen who has a similar level of influence?

Fred

No. My single subject studies and my reviews of single subject studies have spent much longer amounts of time (3 to 4 months generally) in the peer review process - just the first time around. That's just the time from submission to the first decision! In fact, I have one in review now that I submitted in OCTOBER and I still have not heard anything. Other single subject researchers that I've worked with have similar experiences. These acceptance times should alarm even single subject researchers.

140 papers in 4 years. Hm… My whole big research project in linguistics has now taken over a year, and it will end with one big journal paper, co-authored, since we compare several languages in detail. So, having worked hard on language description, analysis and theory, I will get essentially half a publication (1 journal paper divided by 2 coauthors) in MORE than a year. We MAY eventually carve a little bit out of this huge project and have a smaller proceedings paper. This means I will have 1 publication in more than 1 year of working on a very complex set of data in a comparative perspective. Now, "140 publications in 4 years." What do they do? Type or do research?

The two situations should not be compared. A single subject behavioral researcher with a good research infrastructure and doctoral students could have several quality single subject studies happening at one time.

It's clear from the acceptance times that there wasn't peer review happening at these journals, but the speed with which single subject research can occur is a point that has been lost in this post.

Dear people who think I'm behaving badly. I have added a PPS to my blogpost.

Can you clarify if you contacted any of the people you discuss for an explanation before making these accusations?

My first impulse, whenever I have what looks like a 'shoulder' in an otherwise-exponential decline, is to plot the numbers on a log scale.

One of the people listed in Figure 1 has posted on a Facebook page that the figure contains inaccurate information about his editorship(s) and publications and those of another person listed there. It would have seemed prudent to obtain independent, objective verification -- dare I say peer review? -- of that information before disseminating it.

Please clarify what you mean by "this field." The journals publish articles on a wide range of research topics in autism and developmental disabilities by people from various disciplines.

I was watching that thread on the Facebook page quite closely. That comment wasn't up for long, as whoever runs the page took down the thread. It feels like someone wanted to stir up gossip, hurt some people, and shut things down as soon as someone who's being maligned had a chance to defend themselves in the same space where the rumors swirled. Wonder what the motive is there?

I'm not one of the people mentioned anywhere on this page, but more than one of my coauthors are being dragged through the mud. The inaccuracies in facts and figures, combined with a lack of evidence of any actual conspiracy, makes me hesitate to take this whole thing nearly as seriously as I otherwise might. I dislike the idea that my scholarship might be devalued due to the perception of wrongdoing by those around me. However, I know I did not engage in any dishonest practices. The work I did was legitimate. I can't speak for others' actions, but I never had a reason to believe my coauthors were misbehaving, either.

I'm staying anonymous for extremely obvious reasons.

But what inaccuracies? Specify them please!

I'm referring mainly to the inaccuracies pointed out in the Facebook post that the previous anonymous referenced. They're easily verifiable facts. But I'm not going to help make this post more credible. I'll let my coauthor(s) do that, if they wish to defend themselves here, though I wouldn't blame them for not wanting to respond in this space.

Since everyone is being very vague about what is in the Facebook post, it is very difficult to know what to go and verify.

A desperate attempt from Oxford Professor Behaving Badly Bishop to save face. I'm sure the authorities at Oxford University and The British Psychological Society will take these into account in their investigation.

Everybody:

It is your personal responsibility to report this misconduct of Oxford Professor Behaving Badly Bishop to Oxford University and The British Psychological Society. Do not rely on someone else to complain. This type of behavior cannot be tolerated in our discipline.

"Sam":

It is your personal responsibility to point out where you think the blog post is inaccurate and how the data has been "misconstrued". In other words (given that you're likely one of the people named), defend yourself.

Tim Salomons

People should also investigate the funding streams for her research programs and complain directly to them. My concern is that the bad science here is manifest elsewhere in the work of Oxford Professor Behaving Badly Bishop.

Contact details of her funding sources to come.

To save you time, it's the Wellcome Trust.

You rock

"Sam," you're so angrily going after Professor Bishop for pointing out facts and providing evidence and clarity regarding from where she is gathering her information, and providing her name and putting her reputation on the line. You're making unfounded accusations and hiding in anonymity... it seems clear that you are one of the people named. You're not the least bit credible.

This page shows the Average Review to Final Decision time for RASD. In 2014, it was 7 weeks.

http://journalinsights.elsevier.com/journals/1750-9467/review_speed

I'm not sure how to reconcile this with the suggestion by some commenters here that RASD just has a very fast turnaround.

Given that RASD has electronic online submission and review, it would be very straightforward for Elsevier to investigate the extent to which papers accepted by RASD have actually been sent for review. I trust that such an investigation is underway. If the journal is to have any credibility going forward, then the results of this investigation need to be made public.

There's a broader point though. It's far from perfect but peer review is our first line of defence against pseudoscience. This is particularly important in a field such as autism research, where there are so many dubious claims of causation and treatment. But there needs to be trust. As consumers of journal articles, we're assuming that articles published in a peer reviewed journal have actually undergone peer review.

To my mind, this shows that, for the peer review process to have continuing credibility, all journals should be publishing the peer reviews alongside accepted articles. Whether this is done anonymously or not is a separate question. However, PeerJ, the new open access journal, have shown that this can work very well.

http://blog.peerj.com/post/43139131280/the-reception-to-peerjs-open-peer-review

COI: I'm an Academic Editor at PeerJ

"all journals should be publishing the peer reviews alongside accepted articles. Whether this is done anonymously or not is a separate question."

I love this suggestion. I commented elsewhere that I thought that the names of the referees should be included with any accepted (and hence published) paper - as they do in Frontiers I think. This may make people take the review process more seriously. But now I realise that this might be a bad idea - if a reviewer complicates and delays publication by asking for major (rather than minor) revision, then they *may* make enemies of other scientists. So alas perhaps it should remain anonymous, which I think is a shame for transparency.

Yeah, if my name were attached to my reviews it would inhibit objectivity.

Sam, and other Anonymous posters in the same vein

As the saying goes, 'When in a hole, stop digging!'

These comments only serve to ratchet the 'rotten-smell-o-meter' up a notch.

On the one hand, you have a transparent approach from Dorothy: explicitly identifying the questions, the methods and sources and the results. On the other? So far, innuendo, ad hominem attacks, dissembling and diversion.

You can draw your own conclusions. I know I will.

John Towse

The data presented by Dr. Bishop appears accurate. The interpretation of the data is suspect. First, Dr. Bishop shows that this group has quick turn around with publications. OK. But that seems to be the norm. Then she shows that these people publish a lot. OK. But they publish a lot in these and other journals. They have published a lot prior to and after they were editors in these particular journals. These figures can be interpreted with innocence and praise (great research programs and great journals) or with malice (bad things happening here). There does seem to be cherry picking going on here by Dr. Bishop. I suggest an end to the bitch and moan fest. Let the proper authorities deal with this.

It also appears as I look at Dr. Bishop's blogs that she has a history of playing "got ya". Maybe she is up to her neck in crap.

False.

Pubmed search for Sigafoos, Jeff[Author]. No limits on publication time. Total of 182 articles. Set items per page to 200. Export as XML (summary > xml). Use your favourite tool to explore data. Count number of papers in same journal. Top number is 94 papers in RIDD, i.e. more than 50% of all his articles are published in the same journal. Then 13 papers in "Perceptual and motor skills", 11 in "Journal of applied behavior analysis". Skewed distribution -> Fishy.

Repeat the same for Dorothy Bishop. 119 papers. Max number of papers published on same journal is 10 ("International journal of language & communication disorders") i.e. ~8%; 9 in Developmental science, 8 in Plos One. Not skewed distribution -> Not fishy.

Repeat same for Jon Brock. 37 papers of which max number on same journal is only 4 (Quarterly journal of experimental psychology) i.e. ~10%. Not skewed distribution -> Not fishy.

Shall we do a pubmed query also for your Surname, Name[Author] dear Susan?

Anonymous female PhD student who likes to play with data and is too young to get enemies.

PS: For the lazy ones, I can provide XML data and how to get numbers above but it's so simple that anyone can do it and verify my quick results.
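The counting step described in this comment can be sketched in a few lines. This is only an illustration, not the commenter's actual script: it assumes the journal name for each paper has already been extracted from the PubMed export into a plain list, the function name `top_journal_share` is made up, and the 64 "other journal" entries are placeholders that pad the list to the 182 papers quoted above.

```python
from collections import Counter


def top_journal_share(journals):
    """Return (journal, count, share) for the most frequent journal in the list."""
    counts = Counter(journals)
    journal, count = counts.most_common(1)[0]
    return journal, count, count / len(journals)


# The three named counts come from the comment above. The remaining 64
# entries are placeholders (assumed to be spread over other journals).
journals = (
    ["Research in Developmental Disabilities"] * 94
    + ["Perceptual and motor skills"] * 13
    + ["Journal of applied behavior analysis"] * 11
    + [f"other journal {i}" for i in range(64)]
)

journal, count, share = top_journal_share(journals)
print(f"{journal}: {count} of {len(journals)} papers ({share:.1%})")
```

The share of papers in a single journal is a crude measure of concentration. A high value is a reason to look more closely, not evidence of wrongdoing on its own.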

If the data appears accurate then the suggestion that the fast turn around is the norm is cause for concern (as noted) given that the publishers claim that these papers are adequately peer-reviewed. This is independent of any work done by these authors elsewhere (good or bad).

Statement of conflicts of interest:

I am an academic psychologist and journal associate editor but I do not work in this field. I am acquainted with Professor Bishop but do not know the other named individuals. I am the parent of a child with a severe autistic spectrum disorder.

How about the glut of papers suddenly published in RIDD and RASD in 2015. There are 4-5 volumes for each journal. Not issues, volumes. The turn around times seem to be much longer than recent turn around times for those journals. Proportionally, almost none are by the authors listed above. This seems to confirm the idea of preferential treatment. Where did all of these articles come from?

Great paper Dorothy.

Just an anecdote: this morning I happened to be reading this paper published in RIDD:

http://www.sciencedirect.com/science/article/pii/S0891422215000037#

Interestingly, the reference list comes in two parts: References, and then Further reading. In the latter section, the authors seem to be allowed to paste just about anything, and indeed they have indulged in about 20 references to Lee Swanson's papers!

I am not saying that there is anything dishonest here, beyond a bit of excessive self-publicity. After all, why would they not do it, if that section allows them to advertise all their favourite papers?

But this raises the question of the point and consequences of this dual reference list: How long has it been there in RIDD? Is it present in the other journals being discussed? Are references in that Further reading section indexed as bona fide references like those in the first section? Have they been used for the purpose of dubious citations to selected authors and journals?

Maybe this Further reading section is in itself both an encouragement and a mechanism for bad citation practices.

Regarding the time from submission to acceptance - many journals take steps to artificially shorten this. E.g. they no longer accept papers pending "major revisions" or "minor revisions" – but instead reject papers with an "invitation to resubmit" so when the revised manuscript turns up it is registered as a new submission.

This is done specifically to drive down the submission to acceptance time - so the journal can boast about its fast publication times.

I’ve seen a paper that was in the journal peer-review system for more than a year, eventually show up in print with a claimed submission to acceptance time of just a few weeks.

No idea if this applies to the journals discussed here, and obviously this can’t explain acceptances within one day, but worth bearing in mind when looking at the data.

Except that RIDD papers have dates for "submitted with revisions" in addition to the original submission and final acceptance. That is, for papers that actually were required to revise. So, no, the artificial time for a "reject and resubmit" isn't in practice in this case. This info is readily available in the article PDFs.

It would be even more bizarre and equally problematic if the editors of the journal were rejecting and then immediately accepting their own articles to artificially reduce the time between submission and acceptance for their own papers.

Echo chamber...

Self-citation seems the opposite of reproducible. Look here... I agree with myself 5 times more often than my peers. I know all you scientists are obsessed with peer review, but we common people just see the ego.

what does this even mean?

I can't even...

I have been following the developments on this blog closely, and I have been baffled, amazed and saddened. Firstly I am saddened by the clear evidence that the system has been ‘gamed’. Secondly, I am saddened by the way that some have sought to spread the blame around, or smear the critic. For me, this latter tactic has only served to underline the seriousness of what appears to have transpired at these journals.

This appears to be far larger than simply bad behavior at the journals. It points to a ridiculous level of cheating among a large group of people (those named and their colleagues) to a degree that will lead to serious questions about the legitimacy of their research, as well as particular interventions that have been deemed to be scientifically supported heavily due to these authors. I'm shocked this hasn't been picked up yet by a wider audience.

http://www.timeshighereducation.co.uk/news/journal-editors-self-citation-rate-under-scrutiny/2018713.article

Faculties deserve to be "cheated" when they substitute metrics like h-index for a proper review of a colleague's work.

Do civilians, patients depending on scientists to do their job properly, and tax payers also deserve to be cheated?

I'm far from the science so h-index is something new for me, thanks for that :)

It's common for people to 'optimize' a metric once everyone relies on it rather than on the facts behind it. And if people do that, they're incentivized by the system in one way or another.

That's why we need editors to scrupulously enforce standards. As I explained in my post, it's not usually so easy to game the H-index, but if an editor disregards peer review, encourages numerous papers by the same authors, and does not challenge a high rate of self-citation, then you have a winning formula for gaming the H-index.

Further, if you are listed as a co-author on hundreds of papers without having contributed, your h-index will be high, though little of that could be attributed to your own work.
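To make the two routes discussed in these comments concrete (self-citation and superfluous co-authorship), here is a minimal Python sketch of the H-index together with the two corrections mentioned in the post: dropping self-citations, and dividing citations by the number of authors. The paper data are entirely hypothetical and the function names are illustrative; this is not how Web of Science or any other database computes the figure.

```python
def h_index(citation_counts):
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h

# Hypothetical papers: (total citations, self-citations, number of authors).
papers = [
    (30, 25, 5),
    (25, 20, 5),
    (12, 9, 3),
    (10, 8, 2),
    (6, 4, 2),
    (5, 0, 1),
]

# Raw H-index, as usually reported.
raw = h_index([total for total, _, _ in papers])

# Correction 1: omit self-citations before ranking.
no_self = h_index([total - own for total, own, _ in papers])

# Correction 2: divide each paper's citations by its number of authors.
fractional = h_index([total / n_authors for total, _, n_authors in papers])

print(raw, no_self, fractional)
```

With these made-up numbers, either correction lowers the index, which is the sense in which an uncorrected H-index rewards the practices described above.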

I can't read all these comments - but I appreciate the discussion.

I agree there are/were problems at some journals. However, there is a lot to commend rapid peer review - as long as it happens! It is a terrible shame to wait 6 to 9 months to get a review and find out a paper is rejected. Then you submit it to another journal, and the information is a year or more out of date. Assuming journal #2 gives an R&R and then accepts it, by the time it's published, the study was conducted 2 years or more previously. That's a shame.

We need journals where peer review (as it is supposed to be) happens within a month. In full disclosure, I have published in RASD twice, one paper was an R&R and the revision was accepted same-day. The other paper was accepted within 6 weeks.

Faster would be better, and your point about lengthy reviews is right. It simply shouldn't take 6 months. 1 month is certainly enough. But one day?! Two days?! It should set off some alarm bells.

As a note - the story I provide is a real one (waiting 7 months for a review at another journal, not having the paper accepted, and then going to RASD with out of date info - but it was for a review so we just updated the lit search).

I agree 1 to 2 days should set off alarms, particularly for the first round of reviews. But I think it's harder to criticize 1 week after a resubmission.

Hi Dorothy, I'm posting this because you may have missed my earlier comment.

Can you clarify if you contacted the researchers you named above before publishing this post?

Sorry Niall. No I didn't.

Thanks Dorothy.

Was that an oversight, or do you think that Sigafoos, O'Reilly and Lancioni were not entitled to an opportunity to reply to these accusations before they were published?

In journalism, most codes of ethics place an obligation on investigating journalists to do their utmost to offer a fair right of reply. I know many bloggers do not consider themselves journalists but I think that bloggers should adhere to similar ethical standards.

Did you consider that if you suspected your fellow psychologists of unethical practices, it might be better to attempt to resolve the situation informally or by making a complaint to the relevant professional organisations?

It's clear that you've put a lot of effort into researching many aspects of their work so I'm curious as to your reasoning behind publishing the accusations without offering a right of reply, attempting to resolve the situation informally or making a complaint to a relevant professional organisation.

Ultimately, time will tell if the allegations are substantial but I am very uneasy with the somewhat sensational approach to addressing concerns around editorial processes.

A blog is different than a journal article. For one, any of the people named can respond directly. It's obvious that at least one of them has "anonymously." Matson has responded in other contexts. Their response appears to be to deny any wrongdoing. Prof. Bishop hasn't deleted any responses (including those with contact information for complaints to her supervisors), so the accused are free to post whatever they want.

I'm not talking about the standards of academic journals Anon. I'm referring to journalism - online or otherwise.

After all, you could well say that if somebody wrote an article in The Guardian about you claiming that you were a thief, you were free to write a letter and the Guardian would most likely publish it. Perhaps you believe that standard is sufficient but journalists typically contact the subject of an investigation for comment before publishing any allegations. This is because they, and the courts, have recognised that mud sticks and even if the matter is cleared up later and the newspaper publishes an apology or correction, reputations have been damaged. In this scenario, Sigafoos, O'Reilly and Lancioni may be able to offer explanations for the data offered by Bishop, but even if they do that, their reputations will remain damaged - any victim of false allegations will tell you as much.

I don't see how it is "obvious" that some of these individuals have replied here. That's mere speculation.

Thanks Niall for your comment. I'm afraid I disagree. This is not journalism. In the world of blogging, errors are very rapidly corrected. If a blogger makes an argument that is demonstrably false, then people will pile in to issue a correction, and it will be the blogger who is left with their reputation in tatters.

So far, the arguments against my blogpost have been of three kinds:

a) Questioning my motivation and competence and threatening to inform my Vice Chancellor, the British Psychological Society and the Wellcome Trust of my bad behaviour. All of these will take seriously such complaints and if they are justified, then the mud will stick to me. I note that you are continuing this line of argument by attempting to accuse me of wrongdoing, rather than addressing the abnormal editorial practices that are clearly documented by the data I have presented.

b) Questioning the data. It's been argued that, for instance, the data on lag between submitting and accepting a paper may not always be accurate. While that is true, for it to be a plausible explanation of the observed data, it would have to be the case that somehow such errors affected virtually all the papers whose data contributes to Figure 2. That is not plausible. The additional analysis comparing acceptance times for Sigafoos with those for a matched sample of other papers is not explicable in this way.

c) Questioning whether it is indeed unacceptable for editors to publish in their own journals and for an editor-in-chief to accept papers by colleagues without peer review. While I would not argue that such behaviour is always wrong, the sheer scale on which this has occurred here is breathtaking. My view is that it simply reinforces that we have a massive problem if people think such behaviour is defensible. My view is in line with published guidelines by the Committee on Publication Ethics - see http://publicationethics.org/resources/guidelines, which explicitly discuss these matters.

If there are other innocent explanations for three senior academics to co-author 140 papers in a period of 4 years (that's about one every 10 days), half of them in a journal where they appear to have been accepted without peer review, I'd be very interested to hear them. And I would be more than willing to feature and publicise such an explanation on my blog. And to apologise publicly if a convincing explanation is given.

Just to say that, in response to some earlier comments, I have now added an analysis that explicitly compares acceptance speed for Sigafoos/O'Reilly/Lancioni papers in RASD with speed for a control set of authors. It's shown as PPPS, dated 7th March.

I am joint editor of IJLCD. Just wanted to make 2 comments on the practical side of editing.

First, as to why the 48-hour turnaround is not plausibly a peer review. For that to happen:
i) the journal admin needs to receive the submission, complete the submission checklist and assign it to the editor on the day it arrives (usually 2-4 days)
ii) the editor also needs to pick up the submission on the day it arrives and select reviewers immediately (usually 3-5 days)
iii) the reviewers would then need to respond to that email accepting the review within 24 hours (usually around 7-10 days in my experience)
iv) the reviewers would then have to read the paper, write up comments and post them back into the system that same day (usually 4 weeks)
v) those reviews would both have to have no suggested amendments, not even minor ones
vi) the editor would need to be waiting for those reviews so that when they came in he/she had time to read through the paper, checking the reviewers had not missed anything (usually about 7 days)
vii) the editor would then need to accept the paper outright.
Even if all this were in place, which of course is extremely unlikely, it also relies on reviewers from the same time zone, which is not best practice.

Second, editors are usually highly experienced researchers who are aware of normal process in the field and of the public scrutiny they are under. Journals, after all, largely rely on reputation for submissions. For example, at IJLCD our policy is always that we are *more* stringent with any editor-authored papers - a revise and resubmit recommendation from reviewers has often been treated as reject in this instance. At the very least therefore, it is interesting that some editors do not seem to be monitoring this more carefully and checking both their self-publications and their peer review process, in case they are seen as unusual, biased or unreasonable for the field.
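As a rough check on the comment above, the typical stage durations it quotes can simply be added up. The sketch below is only arithmetic on those quoted figures, under two assumptions of mine: the stages run strictly one after another, and "4 weeks" is taken as 28 days. The stage labels are illustrative.

```python
# Typical durations in days, as (low, high), taken from the figures quoted
# in the comment. Single figures are repeated for both ends of the range.
stages = {
    "admin checks and assigns to editor": (2, 4),
    "editor selects reviewers": (3, 5),
    "reviewers accept the invitation": (7, 10),
    "reviewers write their reports": (28, 28),  # "usually 4 weeks" (assumed 28 days)
    "editor reads reviews and decides": (7, 7),
}

def total_days(stage_ranges):
    """Sum the low and high ends of each stage, assuming sequential stages."""
    low = sum(lo for lo, _ in stage_ranges.values())
    high = sum(hi for _, hi in stage_ranges.values())
    return low, high

low, high = total_days(stages)
print(f"Typical submission-to-decision time: {low}-{high} days")
```

On those figures a typical first decision takes around seven weeks, against the two days at issue here.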

While I would never wish a complaint (no matter how misguided) against an esteemed scientist such as Professor Dorothy Bishop, anonymous commentators might want to bear in mind that the University of Oxford is not going to get too excited about anonymous complaint letters. Bit of a dilemma that.

Most academics slog very hard for every ounce of their h(umble)-index and this sort of gaming of the system shames individuals and journals, and makes the playing field even more uneven.

We should all be immensely grateful that Dorothy Bishop has diverted time and energy from her own publication profile to expose practices that are not bearing up well under scrutiny.

I hope they would take anonymous complaints seriously if the complaints included evidence of wrongdoing (of which I see none by DB).

In any institution there should be a route for anonymous whistleblowing by junior staff, students and so forth who might otherwise not be heard.

Not an academic, but enjoying the show.

Many thanks to DVB for lighting the first candle.

Do hope someone puts this all together in a non peer-reviewed publication to extend the illumination.

Ex Latimer & Ridley

Professor Bishop, as an early career researcher and with all due respect, I would like to point out that in the world of blogging it may still be good practice to first contact people before you name and shame them. Given that you very often refer to the work of Ioannidis, why don't you prefer the peer review route to help with identifying wrongdoing in science? What about this title for your next blog: 'Academics and ethical approach to blogging: are there limits?'

I am a patient person, but I am beginning to become exasperated by this line of argument. Exactly what difference do you think it would have made if I had contacted Sigafoos/O'Reilly/Lancioni before posting my blog? The facts are as I have stated. Nobody has disputed that. Do you think that if I had contacted them in advance that they would have come up with some convincing explanation as to why they had behaved as they did? Nobody has produced such an explanation since I posted my blog. All I have heard is attempts to justify their behaviour as if there is nothing wrong.

It is deeply worrying that you, as an early career researcher, apparently think that there can be justification for publishing loads of papers in journals where you have an editorial role. It does seem that the researchers who do this have had a pernicious influence on their junior colleagues, such that they fail to see just how out of line this behaviour is.

By the time you got on to Ioannidis and peer review, the thread of your logic was beginning to defeat me. You want me to embrace peer review. I do. My scientific papers are published in peer-reviewed journals where the turnaround time is typically a matter of weeks or months rather than days, because I get reviews, respond to them in a revision and often then get re-reviewed. In normal science this is what happens. You don't seem to grasp that.

This, on the other hand, is a blog, which is a different format. It is a very useful format for controversial topics as it provides ample opportunity for debate - very much in line with the kind of post-publication review that Ioannidis recommends - see http://www.vox.com/2015/2/16/8034143/john-ioannidis-interview. I can confirm here that I have not deleted any comments, so the arguments you see here are the best that people can muster. I, and most readers, fail to find any adequate justification for behaviour which clearly contravenes the Committee on Publication Ethics* code of conduct and best practice for editors (downloadable from here http://publicationethics.org/resources/guidelines), among which are the following:

• ensure that all published reports of research have been reviewed by suitably qualified reviewers (e.g. including statistical review where appropriate)

• ensure that non-peer-reviewed sections of their journal are clearly identified