Despite all its imperfections, peer review is one marker of scientific quality – it indicates that an article has been evaluated prior to publication by at least one, and usually several, experts in the field. An academic journal that does not use peer review would not usually be regarded as a serious source and we would not expect to see it listed in a database such as Clarivate Analytics' Web of Science Core Collection which "includes only journals that demonstrate high levels of editorial rigor and best practice". Scientists are often evaluated by their citations in Web of Science, with the assumption that this will include only peer-reviewed articles. This makes gaming of citations harder than is the case for less selective databases such as Google Scholar. The selective criteria for inclusion, and claims by Clarivate Analytics to take research integrity very seriously, are presumably the main reasons why academic institutions are willing to pay for access to Web of Science, rather than relying on Google Scholar.

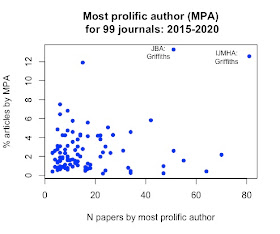

Nevertheless, some journals listed in Web of Science include significant numbers of documents that are not peer-reviewed. I first became aware of this when investigating the publishing profiles of authors with remarkably high rates of publications in a small number of journals. I found that Mark Griffiths, a hyperprolific author who has been interviewed about his astounding rate of publication by the Times Higher Education, has a junior collaborator, Mohammed Mamun, who clearly sees Griffiths as a role model and is starting to rival him in publication rate. Griffiths is a co-author on 31 of 33 publications authored by Mamun since 2019. While still an undergraduate, Mamun has become the self-styled Director of the Undergraduate Research Organization in Dhaka, subsequently renamed as the Centre for Health Innovation, Networking, Training, Action and Research – Bangladesh. These institutions do not appear to have any formal link with an academic institution, though on ORCID, Mamun lists an ongoing educational affiliation to Jahangirnagar University. His H-index from Web of Science is 11. This drops if one excludes self-citations, which constitute around half of his citations, but nevertheless, this is remarkable for an undergraduate.

Of the 31 papers listed on Web of Science as coauthored by Mamun and Griffiths, 19 are categorised as letters to the Editor. Letters are a rather odd and heterogeneous category. In most journals they will be brief comments on papers published in the journal, or responses to such comments, and in such cases it is not unusual for the editor to make a decision to publish or not without seeking peer review. However, the letters coauthored by Griffiths and Mamun go beyond this kind of content, and include some brief reports of novel data, as well as case reports on suicide or homicide gleaned from press reports*. I took a closer look at three journals where these letters appeared, to try to understand how such material fitted in with their publication criteria.

The International Journal of Mental Health and Addiction (Springer) featured in an earlier blogpost, on the basis of publishing a remarkably high number of articles authored by Griffiths. In that analysis I did not include letters. The journal gives no guidelines about the format or content of letters, and has published only 16 of them since 2019, but 10 of these are authored by Griffiths, mostly with Mamun. As noted in my prior blogpost, the journal provides no information about dates of submission and acceptance, so one cannot tell whether letters were peer-reviewed. The publisher told me last July and confirmed again in September that they are investigating the issues I raised in my last blogpost, but there has to date been no public report on the outcome.

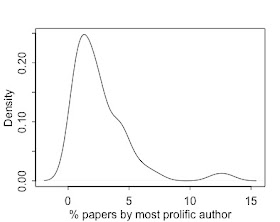

Psychiatry Research, published by Elsevier, is explicit that Case Reports can be included as letters, and provides formatting information (750-1000 words or less, up to 5 references, no tables or figures). Before 2019, around 2-4% of publications in the journal were letters, but this jumped to an astounding 22.4% in 2020, perhaps reflecting what has been termed 'covidization' of research.

The Asian Journal of Psychiatry (AsJP), also published by Elsevier, provides formatting information only (600-800 words, 10 references, 1 figure or table), and does not specify what would constitute the content of letters, but it publishes an extremely large number of them, as shown in Figure 1. This trend started before 2020, so cannot be attributed solely to COVID-mania.

Figure 1: Categorization of documents published in Asian Journal of Psychiatry between 2015 and 2020.

For most journals, letters constitute a negligible proportion of their output, and so it is unlikely to have much impact whether or not they are peer reviewed. However, for a journal like AsJP, where letters outnumber regular articles, the peer review status of letters becomes of interest.

The only information one can obtain on this point is indirect, by scanning the submission and acceptance dates of articles to see if the lag between these two is so short as to suggest there was no peer review. Relevant data for all letters published in AsJP and Psychiatry Research are shown in Table 1. It is apparent that there is a wide range of publication lags, some extending for weeks or months, but that lags of 7 days or less are not uncommon. There is no indication that Mamun/Griffiths receive favourable treatment, but they benefit from the same rapid acceptance as other letters, with a 40-50% chance of acceptance within two weeks.
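The lag check described above can be sketched in a few lines of Python. This is a minimal illustration only, not the R Markdown script linked in the Addendum: the function names and the bin boundaries (7, 14 and 30 days) are assumptions chosen for the example, and the sample lags are the four values quoted in square brackets in the Addendum.

```python
from collections import Counter
from datetime import date


def lag_days(submitted: date, accepted: date) -> int:
    """Days between submission and acceptance."""
    lag = (accepted - submitted).days
    if lag < 0:
        raise ValueError("acceptance date precedes submission date")
    return lag


def lag_category(days: int) -> str:
    """Assign a lag to a bin. The bin edges are illustrative assumptions."""
    if days <= 7:
        return "0-7 days"
    if days <= 14:
        return "8-14 days"
    if days <= 30:
        return "15-30 days"
    return "over 30 days"


def lag_proportions(lags: list[int]) -> dict[str, float]:
    """Proportion of letters falling in each lag bin."""
    if not lags:
        return {}
    counts = Counter(lag_category(d) for d in lags)
    return {category: n / len(lags) for category, n in counts.items()}


# Lags (in days) quoted for four letters in the Addendum.
addendum_lags = [6, 91, 161, 2]
proportions = lag_proportions(addendum_lags)
```

With real journal records one would first compute each lag with `lag_days` from the received and accepted dates shown on the article page, then pass the resulting list to `lag_proportions` to obtain figures comparable to those in Table 1.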

Table 1: Proportions of Letters categorized by publication lag in two journals publishing a large number of Letters.

Thus an investigation into unusual publishing patterns by one pair of authors has drawn attention to at least two journals that appear to provide an easy way to accumulate a large number of publications that are not peer-reviewed but are nevertheless cited in Web of Science. If one adds a high rate of self-citation to the mix, and a willingness of editors to accept recycled newspaper reports as case studies, one starts to understand how it is possible for an inexperienced undergraduate to build up a substantial number of citations.

I have drawn this situation to the attention of the integrity officers at Springer and Elsevier, but my previous experience with the Matson publication ring does not instil me with confidence that publishers will take any steps to monitor or control such editorial practices.

Clarivate Analytics recently published a report on Research Integrity, in which they note how "all manner of interventions and manipulations are nowadays directed to the goal of attaining scores and a patina of prestige, for individual or journal, although it may be a thin coat hiding a base metal." They described various methods used by researchers to game citations, but did not include the use of non-peer-reviewed categories of paper to gain numerous citations. They describe technological solutions to improve integrity, but I would argue they need to add to their armamentarium a focus on the peer review process. The lag between submission and acceptance is a far from perfect indicator but it can give a rough proxy for the likelihood that peer review was undertaken. Requiring journals to make this information available, and to include it in the record of Web of Science, would go some way to identifying at least one form of gaming.

No doubt Prof Griffiths regards himself as a benign influence, helping an enthusiastic and energetic young person from a low-income country establish himself. He has an impressive number of collaborators from all over the world, many of whom have written in his support. Collaboration between those from very different cultures is generally to be welcomed. And I must stress that I have no objection to someone young and inexperienced making a scientific contribution - that is entirely in line with Mertonian norms of universalism. It is the departure from the norm of disinterestedness that concerns me. An obsession with 'publish or perish' leads to gaming of publications and citation counts as a way to get ahead. Research is treated like a game where the focus becomes one's own success rather than the advancement of science. This is a consequence of our distorted incentive structures and it has a damaging effect on scientific quality. It is frankly depressing to see such attitudes being inculcated in junior researchers from around the world.

*Addendum

Letters co-authored by Mamun and Griffiths based on newspaper reports (from Web of Science). Self-cites refers to number of citations to articles by Griffiths and/or Mamun. Publication lags in square brackets calculated from date of submission and date of acceptance taken from the journal website. The script used to extract these dates, and .csv files with journal records, are available on: https://github.com/oscci/miscellaneous, see Faux Peer Review.rmd

Mamun, MA; Griffiths, MD (2020) A rare case of Bangladeshi student suicide by gunshot due to unusual multiple causalities Asian Journal of Psychiatry, 49 10.1016/j.ajp.2020.101951 [5 self-cites, lag 6 days]

Mamun, MA; Misti, JM; Griffiths, MD (2020) Suicide of Bangladeshi medical students: Risk factor trends based on Bangladeshi press reports Asian Journal of Psychiatry, 48 10.1016/j.ajp.2019.101905 [2 self-cites, lag 91 days]

Mamun, MA; Chandrima, RM; Griffiths, MD (2020) Mother and Son Suicide Pact Due to COVID-19-Related Online Learning Issues in Bangladesh: An Unusual Case Report International Journal of Mental Health and Addiction, 10.1007/s11469-020-00362-5 [14 self-cites]

Bhuiyan, AKMI; Sakib, N; Pakpour, AH; Griffiths, MD; Mamun, MA (2020) COVID-19-Related Suicides in Bangladesh Due to Lockdown and Economic Factors: Case Study Evidence from Media Reports International Journal of Mental Health and Addiction, 10.1007/s11469-020-00307-y [10 self-cites]

Mamun, MA; Griffiths, MD (2020) Young Teenage Suicides in Bangladesh-Are Mandatory Junior School Certificate Exams to Blame? International Journal of Mental Health and Addiction, 10.1007/s11469-020-00275-3 [11 self-cites]

Mamun, MA; Siddique, A; Sikder, MT; Griffiths, MD (2020) Student Suicide Risk and Gender: A Retrospective Study from Bangladeshi Press Reports International Journal of Mental Health and Addiction, 10.1007/s11469-020-00267-3 [6 self-cites]

Griffiths, MD; Mamun, MA (2020) COVID-19 suicidal behavior among couples and suicide pacts: Case study evidence from press reports Psychiatry Research, 289 10.1016/j.psychres.2020.113105 [10 self-cites, lag 161 days]

Mamun, MA; Griffiths, MD (2020) PTSD-related suicide six years after the Rana Plaza collapse in Bangladesh Psychiatry Research, 287 10.1016/j.psychres.2019.112645 [0 self-cites, lag 2 days]